What is predictive analytics?

Predictive analytics is a set of methods and technologies that can be used to analyze current and historical data to make predictions about future events. Predictive analytics includes a wide variety of mathematical modeling and computer science techniques with the common goal of using past events to indicate the probability or likelihood of a future event.

Predictive analytics is used across industry verticals but is most effective in industries that can deploy machine learning algorithms to process high volumes of relevant data.

Key takeaways

- Predictive analytics includes a wide variety of mathematical modeling and computer science techniques with the common goal of using past events to indicate the probability or likelihood of a future event.

- The goal of predictive analytics is to use current and historical data to create a model that describes behavior in an environment and predicts or anticipates future trends and patterns.

- Today’s industry-leading predictive analytics software tools use machine learning to develop predictive models. Machine learning is an application of artificial intelligence that emulates the human learning process.

- Sumo Logic’s cloud-native platform uses predictive analytics to help secure your cloud environment.

How does predictive analytics work?

The goal of predictive analytics is to use current and historical data to create a model that describes behavior in an environment and predicts or anticipates future trends and patterns. Some enterprise organizations have developed proprietary technologies for industry-specific predictive analytics, while others rely on third-party software tools for their predictive analytics capabilities.

Either way, the workflow for a successful predictive analytics initiative should be fairly consistent and can be represented by this seven-step process:

Project definition

The most important aspect of the project definition is understanding the goals of your predictive analysis. What are you trying to model? What questions would you like answered? What kinds of events or outcomes are you hoping to predict? These questions will help you understand how predictive analytics will drive value within your organization and determine how you configure your chosen software tool.

Data collection

Predictive analytics is most effective when you can leverage a large volume of data. If your organization already collects or generates data through its normal operating procedures, you will already have data from multiple sources available for analysis. If not, you may have to configure a data mining or data aggregation tool that can harvest data from your organization. Determining how to source data should be part of your project definition.

Data cleaning

Data that has been mined or aggregated has to be cleaned before it can be effectively analyzed. Data cleaning means consolidating data from multiple sources into a single database and ensuring that data is formatted consistently (in the same units, organized the same way) so that it can be efficiently analyzed or processed by your predictive analytics tool.
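As a rough illustration of the consolidation and consistent-formatting steps described above, the sketch below merges records from two sources into one list with shared field names, units and timestamp types. The sources, field names and values are hypothetical and chosen only for the example; a real pipeline would depend on your own data.

```python
from datetime import datetime, timezone

# Hypothetical raw records: two sources reporting the same metric with
# different field names, units (milliseconds vs. seconds) and time formats.
source_a = [
    {"host": "web-1", "latency_ms": 120, "ts": "2024-01-01T00:00:00+00:00"},
    {"host": "web-2", "latency_ms": 95, "ts": "2024-01-01T00:01:00+00:00"},
]
source_b = [
    {"hostname": "WEB-1 ", "latency_s": 0.15, "epoch": 1704067320},
    {"hostname": "web-3", "latency_s": None, "epoch": 1704067380},
]


def clean_a(rec):
    """Normalize a source A record into the shared schema."""
    return {
        "host": rec["host"].strip().lower(),
        "latency_ms": float(rec["latency_ms"]),
        "ts": datetime.fromisoformat(rec["ts"]),
    }


def clean_b(rec):
    """Normalize a source B record; return None if the measurement is missing."""
    if rec["latency_s"] is None:
        return None
    return {
        "host": rec["hostname"].strip().lower(),
        "latency_ms": rec["latency_s"] * 1000.0,  # seconds -> milliseconds
        "ts": datetime.fromtimestamp(rec["epoch"], tz=timezone.utc),
    }


# Consolidate both sources into one consistently formatted, time-ordered list.
cleaned = [clean_a(r) for r in source_a]
cleaned += [c for c in map(clean_b, source_b) if c is not None]
cleaned.sort(key=lambda r: r["ts"])
```

Dropping incomplete records is only one possible policy; depending on the project definition, you might instead fill in missing values or flag them for review.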

Deep data analysis

Once your organization has collected and cleaned a large volume of data, the next step is data analysis. The purpose of data analysis is to discover patterns and trends in the data and to use that information to create predictive models to anticipate future events. There are two general methods for conducting this type of data analysis:

Statistical regression methods

Traditionally, predictive modeling depended on mathematical and statistical methods of analyzing the relationship between one or more output variables of a system and one or more input variables. There are several types of statistical regression methods: linear regression, discrete choice modeling, logistic regression, and time series modeling, to name a few. Each method has its own advantages, disadvantages and ideal use cases.
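To make the idea of relating an output variable to an input variable concrete, here is a minimal sketch of simple linear regression fitted by ordinary least squares. The data (daily log volume in GB) and the names `fit_line`, `days` and `volume_gb` are hypothetical and used only for illustration.

```python
def fit_line(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical historical data: log volume (GB) observed on days 1 to 5.
days = [1, 2, 3, 4, 5]
volume_gb = [10.0, 12.0, 14.5, 15.5, 18.0]

slope, intercept = fit_line(days, volume_gb)

# Use the fitted line to predict the next, not yet observed, day.
forecast_day_6 = intercept + slope * 6  # approximately 19.85 GB
```

A straight line is the simplest possible model. The other regression families named above follow the same pattern of estimating parameters from historical data and then applying them to new inputs.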

Machine learning techniques

Today’s industry-leading predictive analytics software tools use machine learning to develop predictive models. Machine learning is an application of artificial intelligence that emulates the human learning process. Machine learning algorithms process large amounts of “training data,” learning to predict dependent variables even when the underlying relationships are complex or the relationship between inputs and outputs is not known in advance. Neural networks (including multilayer perceptrons) and conditional probability models are among the techniques machine learning algorithms use to generate more accurate predictive models.
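The sketch below shows the basic learning loop in miniature: a single sigmoid neuron (the simplest building block of a neural network) adjusts its weights by gradient descent until it separates labeled training examples. The two features, the labels and the hyperparameters are hypothetical; production tools use far larger models and datasets.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_probability(weights, bias, features):
    """Probability that the example belongs to class 1."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train_neuron(samples, learning_rate=0.5, epochs=2000):
    """Train one sigmoid neuron with stochastic gradient descent on log loss."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in samples:
            error = predict_probability(weights, bias, features) - label
            # Gradient of the log loss with respect to each weight is error * x.
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Hypothetical training data: two normalized features per session and a
# label (1 = suspicious, 0 = normal).
training_data = [
    ((0.1, 0.2), 0), ((0.2, 0.1), 0), ((0.3, 0.3), 0),
    ((0.8, 0.9), 1), ((0.9, 0.7), 1), ((0.7, 0.8), 1),
]

weights, bias = train_neuron(training_data)

# Score sessions the model has never seen.
p_suspicious = predict_probability(weights, bias, (0.85, 0.85))
p_normal = predict_probability(weights, bias, (0.15, 0.15))
```

Nothing in the code states the rule that separates the two classes; the weights are inferred entirely from the labeled examples.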

Model construction

Once the available data has been thoroughly analyzed and processed, a predictive model can be built to anticipate future events. Your predictive analytics tool may create more than one model, then evaluate them to determine which one predicts future events most accurately.
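One common way to compare candidate models is to fit each on historical data and score it on held-out observations it has not seen. The sketch below assumes two hypothetical candidates (a constant mean baseline and a linear trend) and uses mean absolute error as the accuracy measure; the data and names are illustrative only.

```python
def mean_absolute_error(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def fit_mean(xs, ys):
    """Candidate 1: always predict the historical average."""
    average = sum(ys) / len(ys)
    return lambda x: average


def fit_trend(xs, ys):
    """Candidate 2: straight-line trend fitted by ordinary least squares."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x


# Hypothetical data, split into a training period and a held-out period.
train_x, train_y = [1, 2, 3, 4, 5, 6], [10.0, 12.0, 14.5, 15.5, 18.0, 20.0]
test_x, test_y = [7, 8], [22.0, 23.5]

candidates = {"mean_baseline": fit_mean, "linear_trend": fit_trend}
scores = {}
for name, fit in candidates.items():
    model = fit(train_x, train_y)
    scores[name] = mean_absolute_error(test_y, [model(x) for x in test_x])

# Keep the candidate with the lowest error on the held-out data.
best_model = min(scores, key=scores.get)
```

Scoring on held-out data rather than the training data guards against choosing a model that has merely memorized the past.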

Deployment

Once you have generated a useful predictive model, the next step is to deploy it into everyday use. Your definition of “everyday use” goes back to the project definition and initial goals. If your predictive analytics tool is capable of analyzing computer-generated event logs to detect security events, deployment could mean using the model to analyze data in real time and generate security threat reports that help prevent data breaches. In some cases, you may even be able to resolve issues proactively by automating responses to predicted events.
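As a simplified picture of real-time analysis, the sketch below scores each incoming measurement against a running baseline and raises an alert when it deviates sharply. It is a generic z-score check using Welford's online mean and variance update, not a description of any particular product; the class name, threshold, warm-up length and data are all hypothetical.

```python
import math


class StreamingScorer:
    """Scores values one at a time against a running mean and deviation."""

    def __init__(self, threshold=3.0, warmup=5):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean
        self.threshold = threshold
        self.warmup = warmup

    def score(self, value):
        """Return the z-score of value against history, then update history."""
        z = 0.0
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0:
                z = (value - self.mean) / std
        # Welford's online update of the mean and squared deviations.
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (value - self.mean)
        return z

    def is_alert(self, value):
        return abs(self.score(value)) > self.threshold


# Hypothetical stream: failed logins per minute parsed from event logs.
failed_logins_per_min = [3, 4, 2, 5, 3, 4, 3, 40, 4]

scorer = StreamingScorer()
alerts = [(minute, count)
          for minute, count in enumerate(failed_logins_per_min)
          if scorer.is_alert(count)]
```

In this sketch the anomalous value is also folded into the baseline afterwards; a deployed system might exclude flagged values or use a sliding window instead.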

Monitoring

Your organization should not rely entirely on predictive analytics to drive your interpretation of data. Predictive models should be continuously monitored and reviewed to ensure their effectiveness. New data can be integrated as it becomes available to help improve the model on an ongoing basis.
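Continuous monitoring can be as simple as comparing each prediction with the outcome once it is known and watching the recent error. The sketch below keeps a rolling mean absolute error and flags the model for review when that error exceeds a limit. The class name, window size, limit and observations are hypothetical.

```python
from collections import deque


class ModelMonitor:
    """Tracks recent prediction error and flags when it grows too large."""

    def __init__(self, window=5, max_mae=2.0):
        self.errors = deque(maxlen=window)  # keeps only the latest errors
        self.max_mae = max_mae

    def record(self, predicted, actual):
        self.errors.append(abs(predicted - actual))

    def rolling_mae(self):
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def needs_review(self):
        # Only judge the model once a full window of outcomes is available.
        window_full = len(self.errors) == self.errors.maxlen
        return window_full and self.rolling_mae() > self.max_mae


# Hypothetical (predicted, actual) pairs: accurate at first, then drifting.
observations = [
    (10, 10.5), (12, 11.5), (14, 14.2), (16, 15.8), (18, 18.4),
    (20, 23.0), (22, 26.5), (24, 29.0), (26, 32.0), (28, 35.0),
]

monitor = ModelMonitor()
flags = []
for predicted, actual in observations:
    monitor.record(predicted, actual)
    flags.append(monitor.needs_review())

# Index of the first observation at which the model was flagged.
first_flag = flags.index(True)
```

A flag like this would typically prompt a human review or a retraining run that incorporates the newer data.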

Who uses predictive analytics?

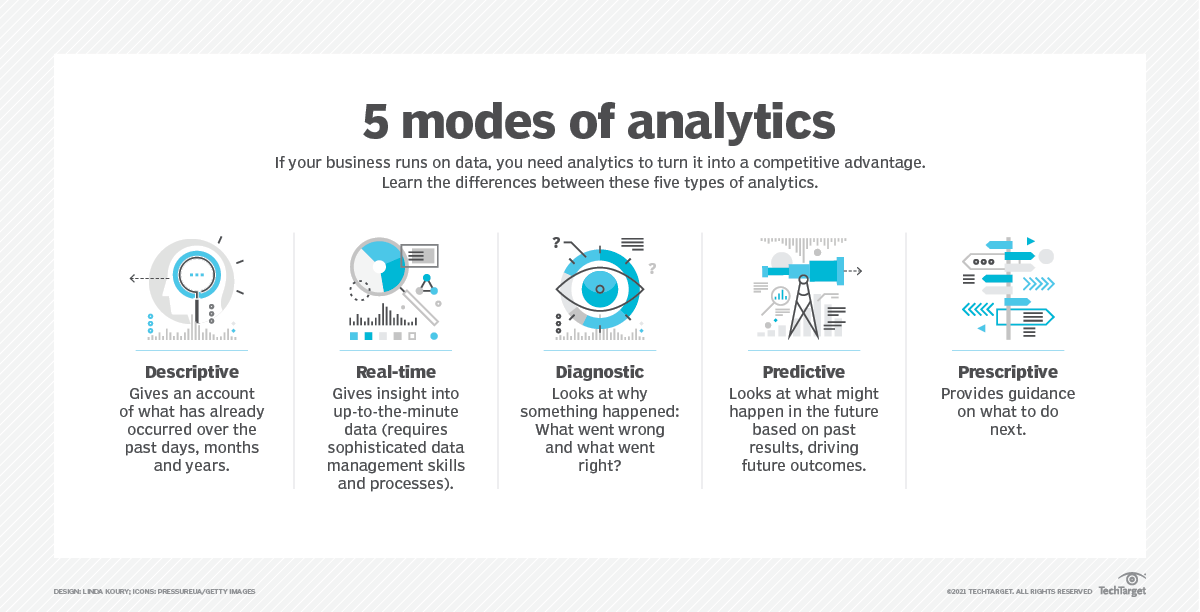

Image: TechTarget

Predictive analytics is useful in any industry where anticipating future events has business value.

In the financial sector, banks use predictive analytics to detect credit card fraud, assess whether a loan should be extended to a specific applicant, or predict changes in asset prices.

Online retailers like Amazon use predictive analytics to identify up-sell and cross-sell opportunities. Their prediction engines use individualized customer data to display items that the customer is most likely to purchase.

Insurance companies also use predictive analytics to evaluate the risk associated with insuring a specific person or asset.

Sumo Logic uses predictive analytics to power cloud security

Sumo Logic’s cloud-native platform uses predictive analytics to help secure your cloud environment. Our tool automates the aggregation and cleaning of event logs from throughout your cloud environments, then uses statistical methods, indexing, filtering and machine learning techniques to identify operational issues and security threats.

Sumo Logic enables rapid incident response by streamlining root cause analysis and predicting future KPI violations and business needs before they negatively impact customers.